Understanding Data Complexity

Exploring why RAG isn't dead, the importance of data classification, and the dangers of unchecked data exhaust.

Welcome to AI Confidential, your biweekly breakdown of the most interesting developments in confidential AI.

Today we’re exploring:

Why RAG isn’t dead (it’s just evolving)

The color system of data classification

Emerging open source projects worth checking out

Also mentioned in this issue: John Willis, Mark Russinovich, Nelly Porter, Michael Reed, James Kaplan, Ravi Kuppuswamy, Daniel Rohrer, Jason Clinton, Teresa Tung, Vijoy Pandey, Nataly Kremer, BigID, Deep Instinct, UpGuard, ServiceNow, Bloomfilter, Anthropic, AGNTCY, and Crawl4AI.

Let’s dive in!

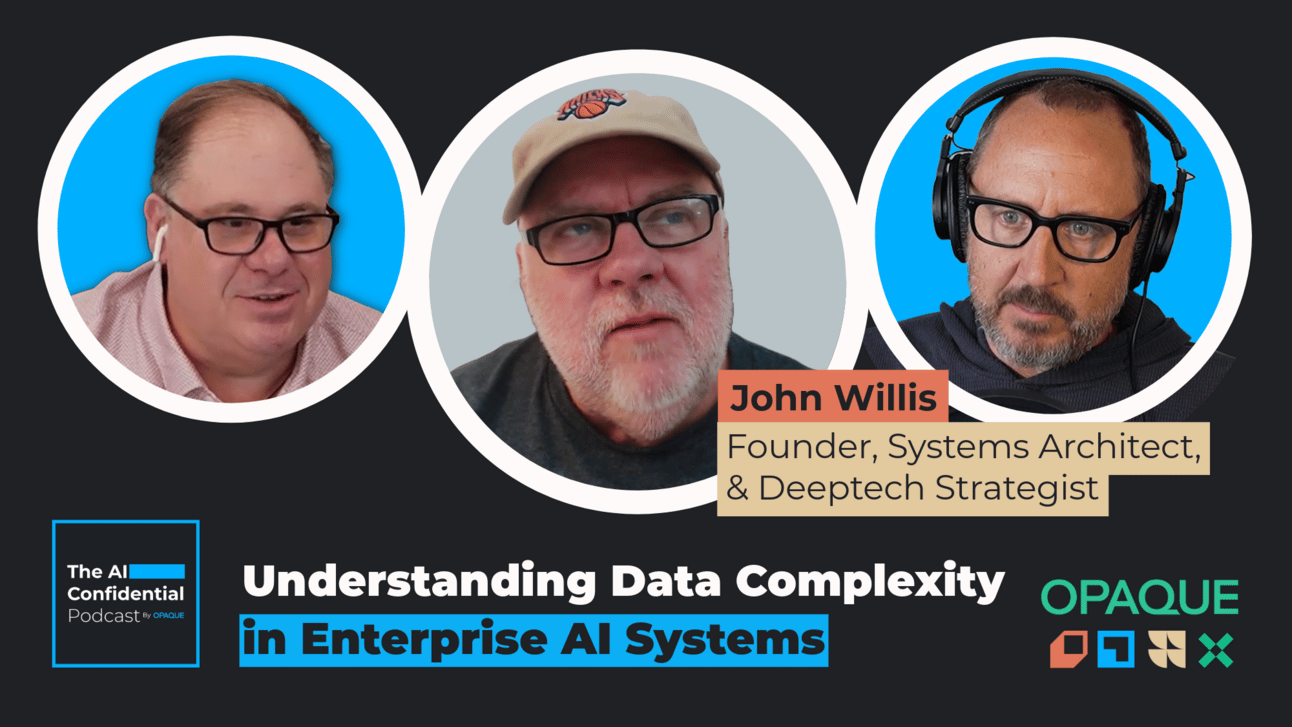

This week on the AI Confidential Podcast, we’re tackling an overlooked challenge in enterprise AI—governance by design.

As AI systems become more embedded in business workflows, bolt-on compliance policies and afterthought oversight aren’t cutting it.

Instead, governance has to be intentional, architectural, and built into core systems from Day 1.

That's the key message podcast guest John Willis, author of Rebels of Reason and co-author of The DevOps Handbook, wants you to understand.

One of the biggest threats he’s flagging?

Data exhaust.

That's the unstructured, often sensitive information your systems generate behind the scenes.

From manufacturing process details to AI model configurations, this invisible data trail is growing fast—and most companies aren’t tracking it.

That's why John sees data classification as the bedrock of AI governance. When he consults with enterprise clients, he uses a color-based framework:

Blue data is non-sensitive and often public, like published research or white papers

Yellow data is metadata that may contain competitive advantages, like workflow patterns and assembly line details

Red data is highly sensitive and must never leak, like proprietary information and internal strategies

Knowing the difference helps teams make smarter decisions about what data is used, where it lives, and how it is secured—all of which are essential for protecting the brand.
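To make the color scheme concrete, here is a minimal Python sketch of how labels could gate what data is used, assuming every document already carries one of John's three colors. The `Classification` enum, the `Document` type, the `indexable` helper, and the policy itself (blue always allowed into a shared retrieval index, yellow only when explicitly opted in, red never) are hypothetical illustrations, not part of John's framework or any OPAQUE product.

```python
from dataclasses import dataclass
from enum import Enum


class Classification(Enum):
    """Hypothetical encoding of the blue/yellow/red scheme."""
    BLUE = "blue"      # non-sensitive, often public
    YELLOW = "yellow"  # metadata that may reveal competitive advantages
    RED = "red"        # highly sensitive; must never leak


@dataclass(frozen=True)
class Document:
    doc_id: str
    text: str
    label: Classification  # required: a Document cannot be built unlabeled


def indexable(docs, allow_yellow=False):
    """Return the documents permitted in a shared retrieval index.

    Assumed policy (illustrative only): blue is always allowed,
    yellow only when explicitly opted in, red never.
    """
    allowed = {Classification.BLUE}
    if allow_yellow:
        allowed.add(Classification.YELLOW)
    return [d for d in docs if d.label in allowed]


docs = [
    Document("wp-01", "Published white paper", Classification.BLUE),
    Document("line-7", "Assembly line timing metadata", Classification.YELLOW),
    Document("strat-q3", "Internal strategy memo", Classification.RED),
]

print([d.doc_id for d in indexable(docs)])                     # ['wp-01']
print([d.doc_id for d in indexable(docs, allow_yellow=True)])  # ['wp-01', 'line-7']
```

Because the label is a required field in this sketch, nothing reaches the index without a classification. In practice, the harder part is assigning those labels to data exhaust in the first place.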

Also in this episode, you'll hear John unpack:

Why legacy systems and tech debt still haunt AI adoption

Why RAG isn’t dead—and where it still delivers real impact

How understanding architecture’s past helps future-proof AI systems

How the NORMAL stack embeds AI governance into every layer of the enterprise

We loved getting to talk shop with John, and were super honored to have him speak as part of yesterday’s pre-Summit workshop at the Confidential Computing Summit.

The episode is live now. Give it a listen here.

Keeping it Confidential

In 2024, what percentage of breaches involved data that was not properly classified?

Less than 5%

12%

20%

35%

See the answer at the bottom.

Code for Thought

Important AI news in <2 minutes

🔒 69% of organizations cite AI-powered data leaks as a top concern in 2025, yet 47% have no AI-specific security measures in place, a BigID report finds.

📉 Only 6% of organizations currently have an advanced AI privacy strategy in place, the same report found, leaving the rest vulnerable to leaks.

📤 A Russian AI chatbot leaked the personal data of over 570 Canva creators online, UpGuard found.

🎭 More than 45% of enterprises saw an uptick in targeted phishing and deepfake impersonations this year, according to a recent Deep Instinct study.

🛡️ 69% of cybersecurity experts say that while AI has been a boon for their teams, it has also contributed to SecOps burnout, the same study reports.

Community Roundup

Updates involving OPAQUE and our partners

Day 1 is officially underway! OPAQUE’s 2025 Confidential Computing Summit has kicked off in San Francisco, bringing together the leaders shaping the next era of secure enterprise AI.

🗓️ Here’s what’s happening today:

🎤 Keynotes from:

Mark Russinovich, CTO, Deputy CISO and Technical Fellow, Microsoft Azure

Nelly Porter, Director of Product Management, Google

Michael Reed, Senior Director of Confidential Computing at Intel

James Kaplan, McKinsey & Company Partner and CTO of McKinsey Technology

João Moura, CEO of CrewAI

🗓️ Coming up tomorrow:

🎤 Talks from:

Ravi Kuppuswamy, SVP of Server Solutions at AMD

Daniel Rohrer, VP Software Security, NVIDIA

Jason Clinton, CISO, Anthropic

Teresa Tung, Senior Managing Director at Accenture

OPAQUE in the press

⚡️ OPAQUE is picking up momentum, and our team is absolutely buzzing ⚡️

Today, at our Confidential Computing Summit, we announced the launch of our Confidential Agents for Retrieval-Augmented Generation.

That's RAG with turnkey workflows, built on NVIDIA NeMo Guardrails for LangGraph, LangChain's agent framework.

Unlike traditional agent frameworks, OPAQUE Confidential Agents provide infrastructure-level confidentiality guarantees, designed to:

⭐️ Secure every interaction

⭐️ Ensure real-time compliance

⭐️ Deliver complete auditability

⭐️ Enable multi-agent collaboration

OPAQUE in the wild

Today, OPAQUE announced that we have officially joined AGNTCY, and we couldn’t be more excited.

Created as an open-source collective for inter-agent collaboration, AGNTCY is a space to help innovate, develop, and maintain software components and services for agentic workflows.

🗣️ Here’s what Vijoy Pandey, GM and Senior Vice President, Outshift by Cisco, had to say:

"Before multi-agent software can truly become embedded in critical processes, we need verifiable privacy, data sovereignty, integrity, and runtime guarantees so organizations can safely scale AI ecosystems without duct-taping manual review at every step. That's why we're welcoming OPAQUE as a member in AGNTCY. They help solve the trust infrastructure problem we're all going to hit as we build the Internet of Agents together."

We’re super hyped to join great companies like Cisco, CrewAI, LangChain, and Galileo as supporters of this project.

Stay tuned for our next podcast episode, coming later in July, where we talk with Vijoy about our partnership with AGNTCY!

Partnering with OPAQUE

ServiceNow is reimagining how businesses use AI, and that includes working with OPAQUE, Microsoft Azure, and NVIDIA.

Previously, ServiceNow struggled to respond quickly to requests from its sales desk because of the volume and sensitivity of the data involved.

Since partnering with OPAQUE, the results speak for themselves:

✅ Answers get delivered 99% faster

✅ Response times went from 4 days to 8 seconds

✅ They increased output by 41%

✅ They reduced costs by 56%

ServiceNow is just one example of the power of confidential agentic AI—and the future is only looking up.

Open source spotlight

🕷️ Crawl4AI is an open-source tool developers can use to scrape data from the web to train emerging LLMs and agents.

💬 Microsoft's NLWeb simplifies building conversational interfaces for websites, letting humans and agents use the same natural language APIs.

🌍 The Linux Foundation is hosting its annual Open Source Summit in Amsterdam, the Netherlands, from August 25 to 27, where open source developers can collaborate and network.

Quotable

🤖 “As AI becomes a powerful cyber shield and a potential attack vector, security leaders must evolve their thinking and tooling to match.”

— Nataly Kremer, Chief Product Officer at Check Point Software, for the World Economic Forum

Trivia answer: 35%

According to IBM, shadow data (AKA data that is not properly classified) was involved in 35% of breaches in 2024. Worse, breaches involving shadow data took 26.2% longer to identify and 20.2% longer to contain, truly living up to its evil-sounding nickname.

Stay confidential!

- Your friends at OPAQUE
